This blog has been inactive for a long time, and oddly, one of the causes is the layout of my house. I have a lot of technology, but I'm a big believer that tech works best when it's unseen and out of the way. So the computers are all hidden away in a back room, and my main internet access devices are a smartphone and an iPad mini (with a keyboard, at least). When I want to blog I either use the iPad or go to the back room, and convenience wins out every time.

The problem is that the Blogger interface is really quite horrible to use on an iPad and, worse, I don't get spellchecking, which is a pretty important requirement given my terrible spelling. So it was all just too hard and too annoying, and I stopped.

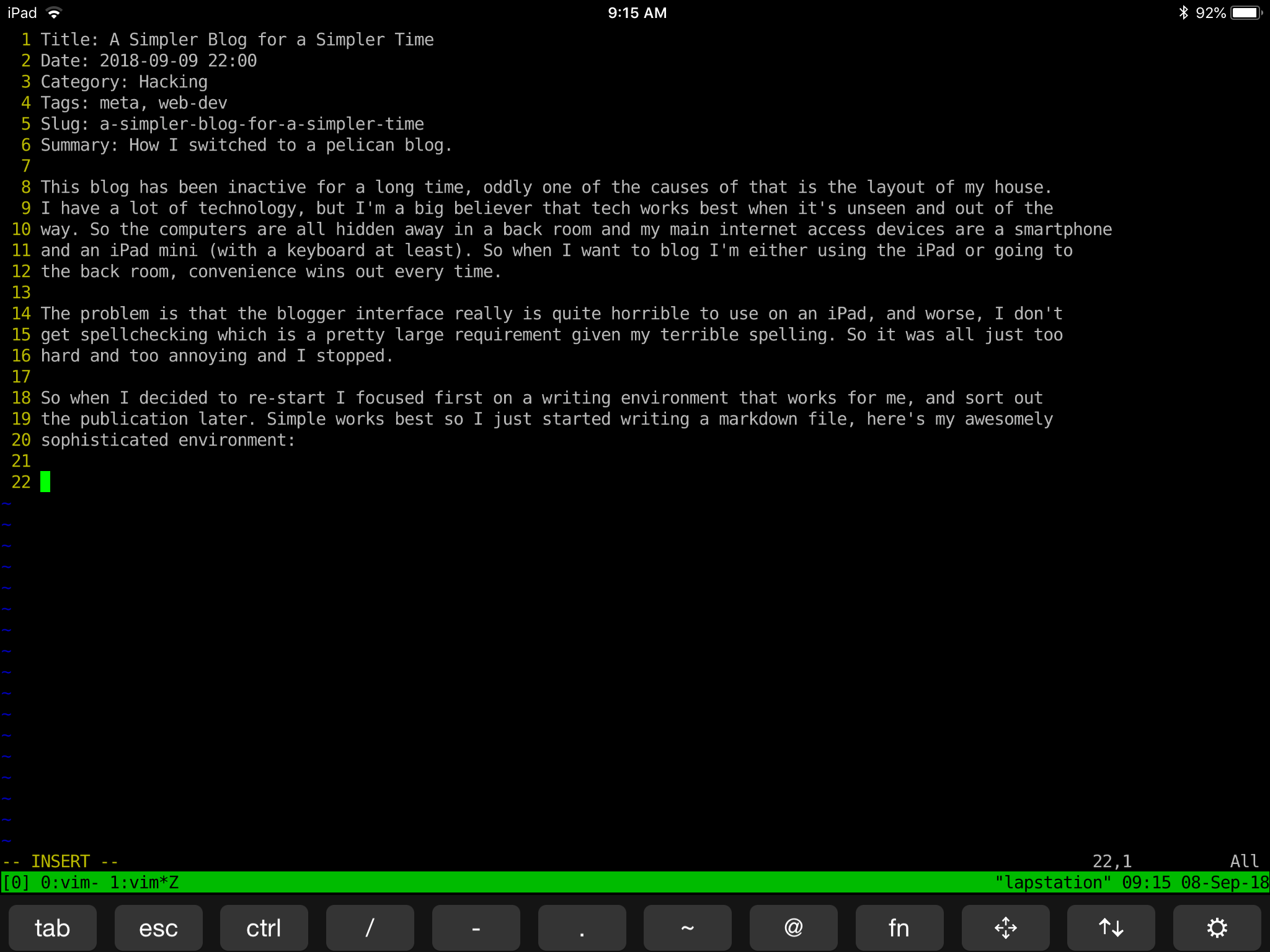

So when I decided to re-start I focused first on a writing environment that works for me, and sort out the publication later. Simple works best so I just started writing a markdown file, here's my awesomely sophisticated environment:

That would be vim, running inside tmux, over ssh, accessed from an iPad. I said "works for me" not "is sensible".

So I sat down and wrote up my spelunking through the performance optimisations in react-redux in Markdown, without thinking about how I'd publish it. My initial plan was to generate the HTML and paste it into the Blogger interface. That did not end well, as it did not play nicely with the Blogger styles.

I'd been meaning to move off Blogger for a while anyway because of its lack of HTTPS support on custom domains (yes, they finally added it, but it's disturbing how long that took given Google's general HTTPS stance), so I decided it was time to pull the plug and switch to a static site generator. After a bit of googling I found Pelican, which is exactly what I was looking for: it uses Markdown (which I know) or reStructuredText (which I want to learn) for posts, Jinja2 templates for customization and theming, and it's a nice simple concept that makes sense to me.

Configuring Pelican

Actually getting Pelican up and running was trivial: a quick pip install, run the quick-start script, and I was done. But I wanted to keep the existing blog content and, even though I'm fairly sure nobody actually links to this blog, I wanted to make sure that any existing links would still work.

Pelican has good support for importing from an Atom feed, so getting the existing posts in was easy. I had to do a bit of tidying up of the resulting Markdown, but it was workable; it might have been a bigger challenge if I'd had more posts. I suspect the RST import support may be a bit better.

Maintaining Post URLs

I knew I was going to have to use mod_rewrite at some point, but I wanted to do as much as possible without bringing out that particular hammer.

First challenge: Blogger URLs have the year and month in them, e.g. 2018/12/my-awesome-post.html, whereas by default Pelican just uses the post slug. It turns out there's a setting for post URLs, so just adding these two lines to my pelicanconf.py gave all my posts Blogger-compatible URLs:

    ARTICLE_URL = '{date:%Y}/{date:%m}/{slug}.html'
    ARTICLE_SAVE_AS = '{date:%Y}/{date:%m}/{slug}.html'
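As far as I can tell, Pelican fills these patterns in with Python's `str.format`, passing the article's metadata as keyword arguments. That's why `{date:%Y}` works: the part after the colon is handed to `datetime.__format__`, which behaves like `strftime`. A minimal sketch of the expansion, assuming a hypothetical post dated December 2018 with the slug `my-awesome-post`:

```python
from datetime import datetime

ARTICLE_URL = '{date:%Y}/{date:%m}/{slug}.html'

# Substitute the (hypothetical) article metadata into the pattern;
# "{date:%m}" calls datetime.__format__("%m"), i.e. strftime("%m").
url = ARTICLE_URL.format(date=datetime(2018, 12, 1), slug='my-awesome-post')
print(url)  # 2018/12/my-awesome-post.html
```

Because `ARTICLE_URL` and `ARTICLE_SAVE_AS` use the same pattern, the link Pelican writes and the file it saves always agree.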

But the year and month URLs were still presenting directory listings. Luckily, Pelican can also generate lists of the posts in each year or month, so I made those the index pages for the year and month directories:

    YEAR_ARCHIVE_SAVE_AS = '{date:%Y}/index.html'
    MONTH_ARCHIVE_SAVE_AS = '{date:%Y}/{date:%m}/index.html'
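The archive patterns expand the same way, so each distinct year and each distinct year/month among the posts gets its own `index.html`, which the web server then serves for the bare directory URL (assuming the usual `DirectoryIndex index.html`). A sketch of which files that produces for three hypothetical post dates:

```python
from datetime import datetime

YEAR_ARCHIVE_SAVE_AS = '{date:%Y}/index.html'
MONTH_ARCHIVE_SAVE_AS = '{date:%Y}/{date:%m}/index.html'

# Hypothetical post dates, for illustration only.
post_dates = [datetime(2017, 3, 2), datetime(2018, 12, 1), datetime(2018, 12, 20)]

# One archive page per distinct year and per distinct (year, month);
# the set removes duplicates such as the two December 2018 posts.
archive_files = sorted(
    {YEAR_ARCHIVE_SAVE_AS.format(date=d) for d in post_dates}
    | {MONTH_ARCHIVE_SAVE_AS.format(date=d) for d in post_dates}
)
for path in archive_files:
    print(path)
```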

With this in place, going to /2018/ returns a listing of all posts made in 2018. Just what I wanted!

URL Redirections and Error Pages

With the obvious links covered, I wanted to make sure any other URLs that might have been in use kept working, which meant first discovering what those URLs were. Normally I'd just pull them out of the access logs, but I couldn't find a way to get access logs out of Blogger.

I decided to solve this problem with linkchecker (as an aside, the original linkchecker project is abandoned, so the linkchecker you install with pip is woefully out of date). Running linkchecker against my old Blogger site pulled out a few interesting groups of links to redirect:

- RSS / Atom feeds
    - /feeds/posts/default
    - /feeds/posts/default?alt=rss
- Label searches
    - /search/label/managing

This is where I decided it was time to bring out the mod_rewrite hammer. Pelican generates Atom and RSS feeds in sensible locations. As with the posts, I could have configured Pelican to generate these feeds at the same URLs as the Blogger ones, but I much prefer the Pelican URLs. So instead I put some rewrite rules in my .htaccess file so that clients accessing the old URLs are redirected to the new ones:

    # RSS Feed From Blogger
    RewriteCond %{QUERY_STRING} "alt=rss" [NC]
    RewriteRule "^feeds/posts/default$" feeds/all.rss.xml [NE,QSD,END,R=301,E=permacache:1]

    # Atom Feed From Blogger
    RewriteRule "^feeds/posts/default$" feeds/all.atom.xml [NE,QSD,END,R=301,E=permacache:1]

    #################################################################
    # Caching Headers                                               #
    #################################################################

    # For the content that sets the right env variables
    # Set a long future expire so that it's permanently cached.
    Header always set Expires "Wed, 01 Jan 2020 12:00:00 GMT" env=permacache
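A `RewriteCond` only guards the single `RewriteRule` that follows it, so the RSS rule fires only when the query string contains `alt=rss`, and every other request for `feeds/posts/default` falls through to the Atom rule. A rough Python model of that ordering; `feed_redirect` is a hypothetical helper (not how mod_rewrite is implemented), and paths are given without the leading slash, as mod_rewrite sees them in .htaccess:

```python
import re
from typing import Optional, Tuple

FEED = r"^feeds/posts/default$"


def feed_redirect(path: str, query: str) -> Optional[Tuple[int, str]]:
    """Model the two Blogger feed rules; returns (status, target) or None.

    QSD discards the old query string, so neither target carries one.
    """
    # RSS rule: applies only when the preceding RewriteCond ([NC]) matches.
    if re.match(FEED, path) and re.search(r"alt=rss", query, re.IGNORECASE):
        return 301, "feeds/all.rss.xml"
    # Atom rule: unconditional, so it catches every other feed request.
    if re.match(FEED, path):
        return 301, "feeds/all.atom.xml"
    return None
```

Swapping the two rules would send every feed request to the Atom feed, since the unconditional rule would always match first.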

Because Blogger serves both of these feeds from the same resource, distinguished only by a query parameter, I had to make use of a RewriteCond, but it's really not that complex. Because the feeds have permanently moved, I didn't want clients to keep checking the old URL first, so I used a 301 "permanent" redirect. I also set a caching header to allow these redirects to be cached until 2020 (which doesn't seem as far away as it used to).

I handled the label searches in a similar way, redirecting each one to the equivalent tag page. It turns out I wasn't using the Blogger labels very well, so I took the opportunity to clean that up and mapped some of the old labels to newer tags that make more sense. This gave me the following block of redirects for the labels:

    RewriteRule "^search/label/git hg source-control" tags/source-control.html [NE,QSD,END,R=301,E=permacache:1]
    RewriteRule "^search/label/linux rambling" tags/linux.html [NE,QSD,END,R=301,E=permacache:1]
    RewriteRule "^search/label/managing" tags/managing.html [NE,QSD,END,R=301,E=permacache:1]
    RewriteRule "^search/label/xvfb screenshot capture" tags/documentation.html [NE,QSD,END,R=301,E=permacache:1]
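With more than a handful of labels, it may be easier to keep the old-label-to-tag mapping in one place and generate these lines. A small sketch of such a generator; `LABEL_TO_TAG` and `label_rules` are hypothetical names, the mapping simply repeats the four rules above, and it assumes the labels contain no regex metacharacters:

```python
# Hypothetical mapping from old Blogger labels to new Pelican tag slugs,
# copied from the four rules above.
LABEL_TO_TAG = {
    "git hg source-control": "source-control",
    "linux rambling": "linux",
    "managing": "managing",
    "xvfb screenshot capture": "documentation",
}

FLAGS = "[NE,QSD,END,R=301,E=permacache:1]"


def label_rules(mapping):
    """Return one .htaccess RewriteRule line per old label, in dict order."""
    return [
        f'RewriteRule "^search/label/{label}" tags/{tag}.html {FLAGS}'
        for label, tag in mapping.items()
    ]


for line in label_rules(LABEL_TO_TAG):
    print(line)
```

The generated lines would still need to sit above any catch-all label rule, since with `END` the first match wins.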

The URLs I've Missed

With all that in place, I'd probably covered most of the URLs that people would actually be looking for, but I wanted to at least attempt a sensible response to anything I'd missed. Looking at the rest of the URLs that came from linkchecker, I came up with the following possibilities:

- Someone's trying to find a label I didn't know about -> send them to the tags page so they can find the right tag

- Someone's trying to search for content -> send them to a Google search of the blog

- Someone hits some other special variant of the search URL -> send them to the archive of all posts so they can find whatever it was they were after

A few more redirects covered all those cases. These, of course, had to come after the more specific redirects I'd already created, so that the specific redirects take precedence over these fallback options:

    # Send any unknown labels to the full tags list
    RewriteRule "^search/label" tags/ [NE,QSD,END,R=301,E=permacache:1]

    # Redirect search into a Google search of the site
    RewriteCond %{QUERY_STRING} "^q=(.*)" [NC]
    RewriteRule "^search" "https://google.com/search?q=site\%3Ablog.technicallyexpedient.com+%1" [NE,QSD,END,R=302,E=permacache:1]

    # Redirect any other search URLs to the archive
    RewriteRule "^search" archives.html [NE,QSD,END,R=301,E=permacache:1]

The only interesting one here is the redirection into Google for searches. I used the RewriteCond to extract the search terms, then appended them to a search that's constrained to results from this site.
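Because every rule uses `END`, the first matching rule wins, which is why the catch-all rules have to sit after the specific ones. A rough Python model of the search-related rules in file order; `RULES` and `route` are hypothetical, and the label table is trimmed to one entry for brevity:

```python
import re
from urllib.parse import parse_qs, urlsplit

GOOGLE = "https://google.com/search?q=site%3Ablog.technicallyexpedient.com+"

# Hypothetical table mirroring the .htaccess rules in file order:
# (path pattern, needs a q= query?, target, status).
RULES = [
    (r"^search/label/managing", False, "tags/managing.html", 301),
    (r"^search/label", False, "tags/", 301),
    (r"^search", True, GOOGLE, 302),
    (r"^search", False, "archives.html", 301),
]


def route(path, query):
    """Return (status, target) for the first matching rule, or None."""
    for pattern, needs_q, target, status in RULES:
        if not re.match(pattern, path):
            continue
        if needs_q:
            # Mirrors RewriteCond %{QUERY_STRING} "^q=(.*)" [NC]
            m = re.match(r"^q=(.*)", query, re.IGNORECASE)
            if m is None:
                continue  # condition failed: try the next rule
            # %1 is appended as-is; NE keeps Apache from escaping it again
            return status, target + m.group(1)
        return status, target  # END: the first match wins
    return None


status, url = route("search", "q=vim")
print(status, parse_qs(urlsplit(url).query)["q"])  # 302 ['site:blog.technicallyexpedient.com vim']
```

Decoding the query shows what Google actually receives: `%3A` is the colon in `site:` and the `+` is a space.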

As a final fallback, I wanted a "nice" 404 page in case I had missed any other deep links. Pelican suggests using hidden pages for this, so I created my nice error page as one, complete with a link to the archives. I then registered it as the page to return for 404 errors with the following directive in my .htaccess:

    ErrorDocument 404 /errors/404.html

Sitemap

One final thing I noticed going through the linkchecker URLs was a sitemap. Pelican doesn't generate a sitemap, but it seems like a good thing for a blog to have, to give search engines a hint about what has changed and when. To generate the sitemap I used Pelican's template pages. This feature is not terribly well documented, but it lets you produce content from Jinja2 templates that have access to all the articles and other Pelican objects.

So I created this template in content/src/sitemap.xml, which loops over all the articles and pages to create an XML sitemap:

    <?xml version='1.0' encoding='UTF-8'?>
    <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
    {% for article in articles + pages %}
      {% set date = article.metadata.modified if article.metadata.modified else article.date %}
      <url>
        {# The sitemap protocol requires absolute URLs, so prefix SITEURL #}
        <loc>{{ SITEURL }}/{{ article.url }}</loc>
        {% if date %}
        <lastmod>{{ date.strftime("%Y-%m-%dT%H:%M:%SZ") }}</lastmod>
        {% endif %}
      </url>
    {% endfor %}
    </urlset>
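Since a malformed sitemap fails quietly, it seems worth sanity-checking the generated file. A minimal sketch using only the standard library, assuming the built site lands in Pelican's default `output/` folder; `check_sitemap` and `check_sitemap_file` are hypothetical helpers. It checks that every `<loc>` is an absolute URL (the sitemap protocol requires the full URL, including the scheme) and that every `<lastmod>` matches the format the template writes:

```python
import re
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
# The format the template writes, e.g. 2018-12-01T10:00:00Z
LASTMOD = re.compile(r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z$")


def check_sitemap(xml_bytes):
    """Return a list of problems found in a sitemap document."""
    problems = []
    root = ET.fromstring(xml_bytes)
    for entry in root.findall("sm:url", NS):
        loc = (entry.findtext("sm:loc", default="", namespaces=NS) or "").strip()
        if not re.match(r"^https?://", loc):
            problems.append(f"relative or missing <loc>: {loc!r}")
        lastmod = entry.findtext("sm:lastmod", namespaces=NS)
        if lastmod is not None and not LASTMOD.match(lastmod.strip()):
            problems.append(f"unexpected <lastmod>: {lastmod!r}")
    return problems


def check_sitemap_file(path="output/sitemap.xml"):
    """Read the built sitemap as bytes and check it."""
    with open(path, "rb") as f:
        return check_sitemap(f.read())
```

An empty list from `check_sitemap_file()` means every entry passed both checks.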

I then registered this as a template page in my pelicanconf.py so that Pelican renders the template and publishes the result as the sitemap:

    TEMPLATE_PAGES = {
        'src/sitemap.xml': 'sitemap.xml',
    }

The sitemap should also be referenced from a robots.txt file so that crawlers can find it. I didn't actually need to template that file, but I did want to control where it ends up in the output folder, so I used the same template pages approach.
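For illustration, a minimal sketch of that robots.txt setup, assuming the source lives next to the sitemap template at `content/src/robots.txt` (a hypothetical path). Because it's a template page, the `Sitemap:` line can be built from Pelican's `SITEURL` setting, assuming that is set to the absolute site URL when publishing:

```python
# pelicanconf.py (sketch): publish both templates at the site root.
TEMPLATE_PAGES = {
    'src/sitemap.xml': 'sitemap.xml',
    'src/robots.txt': 'robots.txt',  # hypothetical source path
}

# Hypothetical contents of content/src/robots.txt, rendered by Jinja2:
#
#   User-agent: *
#   Disallow:
#   Sitemap: {{ SITEURL }}/sitemap.xml
```

An empty `Disallow:` line allows crawling of everything, so the file only adds the sitemap hint.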

A Good Project is Never Quite Done

So with all that done, I cut over, and now you can read this post, and the previous one, all in nice simple static HTML.

Of course there are a few more things I want to tidy up, but this is good enough for now.

I'm still using the default theme. I find it nice and readable, but there are a few things I want to move around on the page, and I'd like to update the colours a bit.

I also want to get rid of the .html extension on all the page URLs, as I'd prefer that to be handled by MIME-type negotiation rather than being part of the URL.

Finally, much as messing with these settings is fun, I really should write some more content. Ultimately, that's why I've decided to go live with the current state: I can now start publishing the posts I write in Markdown.
